The Architecture Shift: From Monolithic AI to Orchestrated Ecosystems

Why Enterprise AI Is Moving Beyond the “One Smart Assistant” Myth

For years, enterprise AI followed a deceptively simple idea:

Build one powerful AI assistant and let it handle everything.

On paper, it sounded efficient. In practice, it failed at scale.

At Versalence AI, most of the AI breakdowns we’re called in to fix stem from this exact assumption. As workflows grow cross-functional, regulated, and system-heavy, monolithic AI collapses under its own ambition.

This post explains why that happens, what replaces it, and how Versalence AI implements orchestrated, agentic ecosystems in the real world.

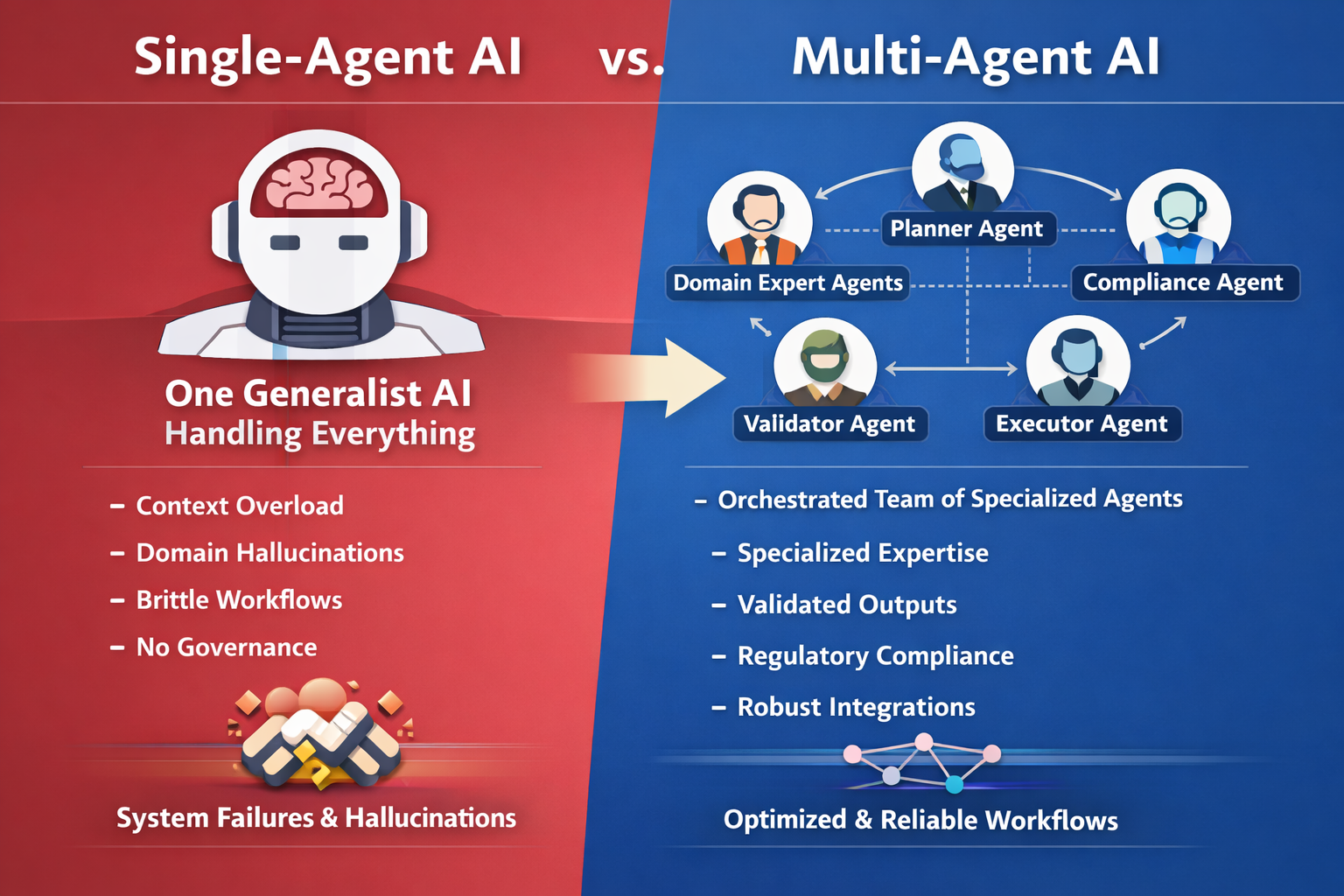

The Core Problem with Single-Agent AI

Traditional enterprise AI deployments rely on a single, general-purpose LLM attempting to:

- Understand business context
- Retrieve knowledge
- Reason across domains
- Enforce compliance
- Execute actions across systems

This creates five structural failure modes:

1. Context Window Saturation

As workflows expand, prompts become overloaded with:

- Business rules
- Policy text
- Data payloads
- Historical context

The result: lost intent, partial reasoning, or silent truncation.
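To make the saturation problem concrete, here is a minimal Python sketch of a prompt assembler working against a fixed budget. It assumes word counts as a rough proxy for tokens, and the section names are hypothetical. Once the budget is exceeded something has to be dropped; a naive assembler does this silently, whereas this one at least reports what it left out.

```python
from dataclasses import dataclass


@dataclass
class Section:
    name: str
    text: str
    priority: int  # lower number = more important


def assemble_prompt(sections: list[Section], budget_words: int) -> tuple[str, list[str]]:
    """Pack sections into a fixed budget in priority order.

    Returns the assembled prompt and the names of sections that did not fit.
    """
    kept: list[Section] = []
    dropped: list[str] = []
    used = 0
    for section in sorted(sections, key=lambda s: s.priority):
        cost = len(section.text.split())  # rough stand-in for a real token count
        if used + cost <= budget_words:
            kept.append(section)
            used += cost
        else:
            dropped.append(section.name)
    prompt = "\n\n".join(f"[{s.name}]\n{s.text}" for s in kept)
    return prompt, dropped


# Hypothetical workload: business rules, policy text, a data payload and history.
sections = [
    Section("business_rules", "rule " * 300, priority=1),
    Section("policy_text", "policy " * 500, priority=2),
    Section("data_payload", "row " * 400, priority=3),
    Section("history", "turn " * 200, priority=4),
]
prompt, dropped = assemble_prompt(sections, budget_words=1000)
print(dropped)  # ['data_payload']
```

Note that the lower-priority history still fits after the payload is dropped, so the model receives conversation history but not the data it was supposed to reason over: exactly the kind of partial context that produces lost intent and partial reasoning.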

2. Domain Hallucination

A generalist model is never truly expert in:

- Compliance
- Finance
- Healthcare
- Legal
- Engineering

It sounds confident—but lacks grounded guarantees.

3. Brittle End-to-End Flows

When one agent owns everything:

- A single misinterpretation breaks the entire workflow
- Recovery logic becomes nearly impossible
- Debugging turns opaque

4. No Separation of Responsibility

Who failed?

- Reasoning?
- Retrieval?
- Validation?
- Execution?

With monolithic AI, failure has no owner.

5. Poor Enterprise Governance

Audit trails, approvals, and policy enforcement are afterthoughts—bolted on, not designed in.

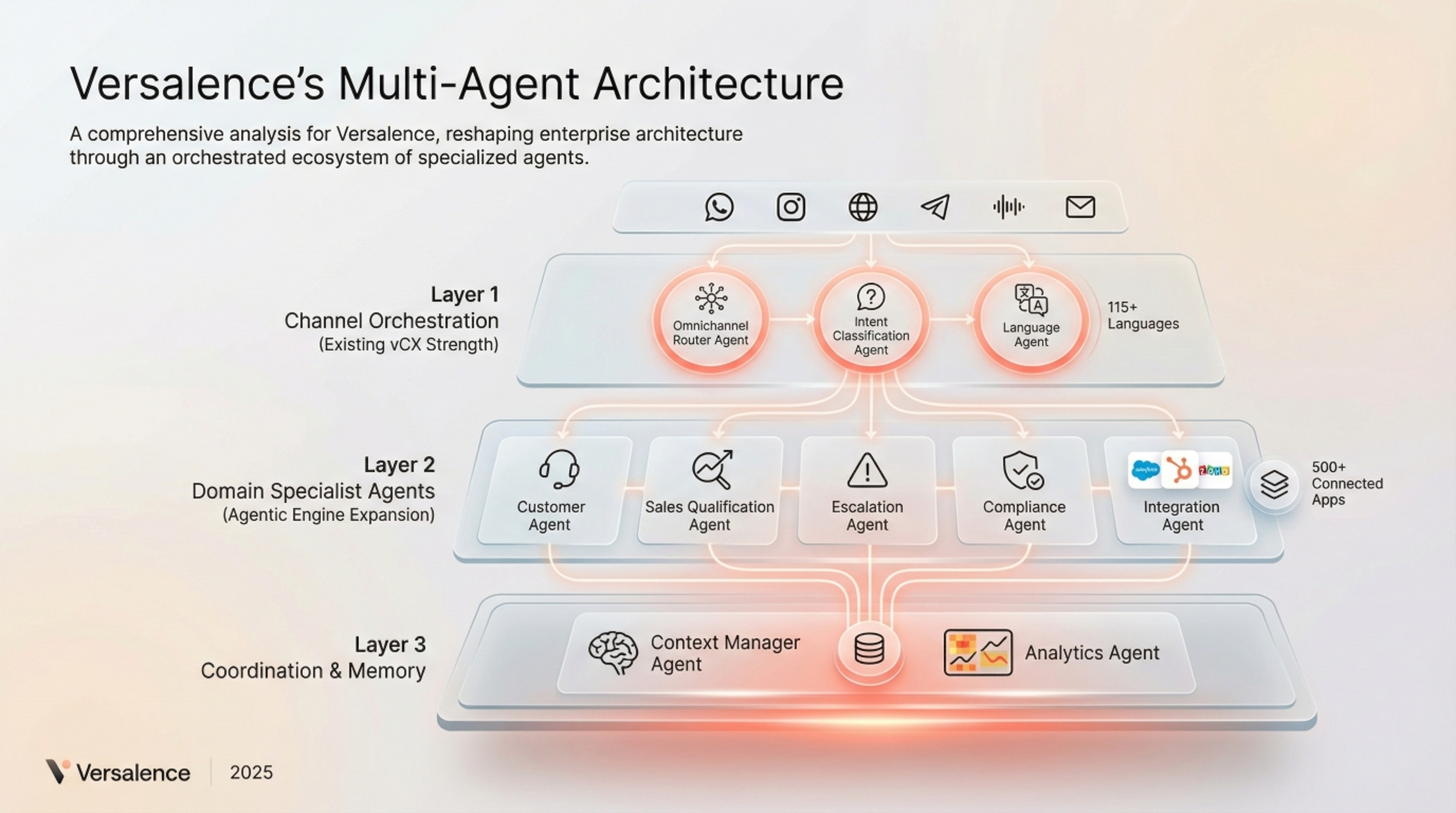

The Multi-Agent Shift: AI as an Orchestrated Team

Enterprises are now re-architecting AI the same way they design human organizations:

Clear roles. Clear responsibilities. Clear handoffs.

Instead of one agent doing everything, multiple specialized agents collaborate under orchestration.

At Versalence, this is what we call Agentic AI.

The Canonical Enterprise Agent Stack

Below is the reference architecture we deploy across regulated, high-scale environments.

🧠 Planner Agent

Role: Strategic decomposition

- Interprets user intent
- Breaks complex requests into atomic tasks
- Determines execution order and dependencies

Think of this as your AI “Program Manager”.
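The planner's output is easiest to reason about as a dependency graph. The Python sketch below assumes the planner (typically an LLM call, omitted here) has already emitted a structured task list; the `Task` type and the refund example are hypothetical. It uses the standard-library `graphlib` module (Python 3.9+) to derive a valid execution order and to group independent tasks into waves that could run in parallel.

```python
from dataclasses import dataclass, field
from graphlib import TopologicalSorter


@dataclass
class Task:
    task_id: str
    description: str
    depends_on: list[str] = field(default_factory=list)


def execution_order(tasks: list[Task]) -> list[str]:
    """Return task IDs in an order that satisfies every dependency.

    Raises graphlib.CycleError if the plan contains a circular dependency.
    """
    graph = {t.task_id: set(t.depends_on) for t in tasks}
    return list(TopologicalSorter(graph).static_order())


def parallel_batches(tasks: list[Task]) -> list[list[str]]:
    """Group tasks into waves; tasks within one wave do not depend on each other."""
    sorter = TopologicalSorter({t.task_id: set(t.depends_on) for t in tasks})
    sorter.prepare()
    batches: list[list[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        batches.append(ready)
        sorter.done(*ready)  # mark the whole wave finished before asking for the next
    return batches


# Hypothetical decomposition of "Process this refund request".
plan = [
    Task("classify", "Classify the request type"),
    Task("fetch_policy", "Retrieve the applicable refund policy", ["classify"]),
    Task("fetch_order", "Look up the customer's order history", ["classify"]),
    Task("score_risk", "Score fraud risk for the order", ["fetch_order"]),
    Task("decide", "Draft the refund decision", ["fetch_policy", "score_risk"]),
]
print(parallel_batches(plan))
# [['classify'], ['fetch_order', 'fetch_policy'], ['score_risk'], ['decide']]
```

A circular plan surfaces immediately as `graphlib.CycleError` instead of stalling mid-workflow, which is one of the cheap wins of making decomposition explicit.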

🎯 Domain Specialist Agents

Role: Depth over breadth

Each agent is optimized for a narrow function:

- Risk assessment
- Knowledge retrieval (RAG)
- Calculations & scoring
- Policy interpretation

These agents:

- Use domain-specific prompts
- Query curated data sources
- Run on fit-for-purpose models
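One way to picture "depth over breadth" is a registry that binds each task type to a dedicated prompt, model and set of data sources. In the Python sketch below, every agent name, prompt, model identifier and source name is a hypothetical placeholder, and the actual model call is out of scope.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SpecialistAgent:
    name: str
    system_prompt: str             # domain-specific instructions
    model: str                     # hypothetical model identifier
    data_sources: tuple[str, ...]  # curated sources this agent may query


# Hypothetical registry: one narrow specialist per task type.
REGISTRY: dict[str, SpecialistAgent] = {
    "risk": SpecialistAgent(
        "risk_assessor", "Score fraud and credit risk from the supplied features only.",
        "mid-size-risk-model", ("risk_features",)),
    "retrieval": SpecialistAgent(
        "policy_retriever", "Answer only from retrieved passages and cite each passage ID.",
        "small-rag-model", ("policy_kb",)),
    "scoring": SpecialistAgent(
        "calculator", "Apply the supplied formulas and show every intermediate value.",
        "small-math-model", ("pricing_tables",)),
    "policy": SpecialistAgent(
        "policy_interpreter", "Explain which policy clauses apply and why.",
        "frontier-model", ("policy_kb",)),
}


def dispatch(task_type: str) -> SpecialistAgent:
    """Return the specialist for a task type, failing loudly if none exists."""
    if task_type not in REGISTRY:
        raise ValueError(f"no specialist registered for task type {task_type!r}")
    return REGISTRY[task_type]


def may_query(agent: SpecialistAgent, source: str) -> bool:
    """Specialists are restricted to their own curated sources."""
    return source in agent.data_sources
```

Failing loudly on an unknown task type is deliberate here: a missing specialist should show up as a visible planning error, not as a silent fallback to a generalist.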

✅ Validator Agent

Role: Quality assurance

- Verifies factual correctness
- Cross-checks outputs against source data
- Flags uncertainty or low confidence

No response moves forward without validation.
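A validation checkpoint can be sketched as a function that receives structured claims from a specialist and checks each one against the record it cites. This Python sketch assumes specialists emit claims in a hypothetical `Claim` shape along with a confidence score, and it uses a deliberately naive substring match as the grounding check; a real deployment would likely need fuzzier matching or a second model acting as judge. The gate logic is the part that carries over: any unresolved issue blocks the response.

```python
from dataclasses import dataclass, field


@dataclass
class Claim:
    text: str       # the statement made in the draft response
    source_id: str  # the record the claim cites
    value: str      # the specific value that must appear in that record


@dataclass
class ValidationResult:
    passed: bool
    issues: list[str] = field(default_factory=list)


def validate(
    claims: list[Claim],
    sources: dict[str, str],
    confidence: float,
    min_confidence: float = 0.8,
) -> ValidationResult:
    """Cross-check each claim against its cited source and gate on confidence."""
    issues: list[str] = []
    for claim in claims:
        source_text = sources.get(claim.source_id)
        if source_text is None:
            issues.append(f"unknown source {claim.source_id!r}: {claim.text}")
        elif claim.value not in source_text:  # naive substring grounding check
            issues.append(f"{claim.value!r} not found in {claim.source_id}: {claim.text}")
    if confidence < min_confidence:
        issues.append(f"confidence {confidence:.2f} below {min_confidence:.2f}")
    return ValidationResult(passed=not issues, issues=issues)


sources = {"policy-7": "Refunds are allowed within 30 days of delivery."}
claims = [
    Claim("The refund window is 30 days.", "policy-7", "30 days"),
    Claim("A 15% restocking fee applies.", "policy-7", "15%"),
]
result = validate(claims, sources, confidence=0.9)
print(result.passed, result.issues)
```

Here the second claim is blocked because nothing in `policy-7` supports it, even though the overall confidence score is high.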

🛡 Compliance Agent

Role: Governance & auditability

- Enforces regulatory and compliance requirements (HIPAA, GDPR, SOC 2, etc.)
- Applies data-handling constraints
- Generates structured audit logs

This agent is non-negotiable in enterprise deployments.
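The Python sketch below shows one slice of a compliance gate: redacting sensitive fields before an agent's output moves on, and writing a structured, machine-readable audit record. The two regexes, the field names and the JSON record layout are illustrative assumptions. Meeting HIPAA or GDPR obligations involves far more than pattern matching, so read this as the shape of the gate rather than a compliance implementation.

```python
import json
import re
from datetime import datetime, timezone

# Illustrative detectors only; real PII detection needs far broader coverage.
PII_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text: str) -> tuple[str, list[str]]:
    """Replace detected PII with labelled placeholders; return the PII types found."""
    found: list[str] = []
    for label, pattern in PII_PATTERNS.items():
        text, count = pattern.subn(f"[REDACTED:{label}]", text)
        if count:
            found.append(label)
    return text, found


def compliance_gate(request_id: str, agent: str, output: str) -> tuple[str, str]:
    """Redact PII from an agent's output and emit a structured audit record as JSON."""
    cleaned, found = redact(output)
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "request_id": request_id,
        "agent": agent,
        "pii_types_found": found,
        "action": "redacted" if found else "passed",
    }
    return cleaned, json.dumps(record)


cleaned, audit_line = compliance_gate(
    "req-001",
    "policy_retriever",
    "Contact jane.doe@example.com (SSN 123-45-6789) about the claim.",
)
print(cleaned)
print(audit_line)
```

Emitting one JSON object per decision keeps the audit trail queryable, which matters more to auditors than the specific detectors used.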

⚙ Executor Agent

Role: Action & integration

- Interfaces with ERP, CRM, and ticketing systems and with payment gateways
- Executes approved actions only
- Reports success or failure states back to the system

Reasoning never directly touches production systems—execution is gated.
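Gated execution can be sketched as an executor that refuses anything not explicitly approved upstream or not on an allowlist of registered handlers. In this Python sketch, the `Action` fields, the two approval flags and the `create_ticket` handler are hypothetical; a real handler would call an ERP, CRM or ticketing API.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Action:
    name: str
    params: dict
    validated: bool = False            # set by the validator agent
    compliance_approved: bool = False  # set by the compliance agent


@dataclass
class ExecutionReport:
    action: str
    status: str  # "success", "rejected" or "failed"
    detail: str = ""


class Executor:
    """Runs only allowlisted actions that have passed both upstream gates."""

    def __init__(self) -> None:
        self._handlers: dict[str, Callable[[dict], str]] = {}

    def register(self, name: str, handler: Callable[[dict], str]) -> None:
        self._handlers[name] = handler

    def execute(self, action: Action) -> ExecutionReport:
        if not (action.validated and action.compliance_approved):
            return ExecutionReport(action.name, "rejected", "missing validation or compliance approval")
        handler = self._handlers.get(action.name)
        if handler is None:
            return ExecutionReport(action.name, "rejected", "action is not on the allowlist")
        try:
            return ExecutionReport(action.name, "success", handler(action.params))
        except Exception as exc:  # report the failure instead of crashing the workflow
            return ExecutionReport(action.name, "failed", f"{type(exc).__name__}: {exc}")


# Hypothetical stand-in for a real ticketing-system call.
def create_ticket(params: dict) -> str:
    return f"ticket opened for customer {params['customer_id']}"


executor = Executor()
executor.register("create_ticket", create_ticket)
print(executor.execute(Action("create_ticket", {"customer_id": "C-42"}, True, True)))
print(executor.execute(Action("create_ticket", {"customer_id": "C-42"})))
```

Returning a report for every outcome, including rejections and handler errors, is what lets the executor "report success/failure states back" rather than leaving the orchestrator to infer them.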

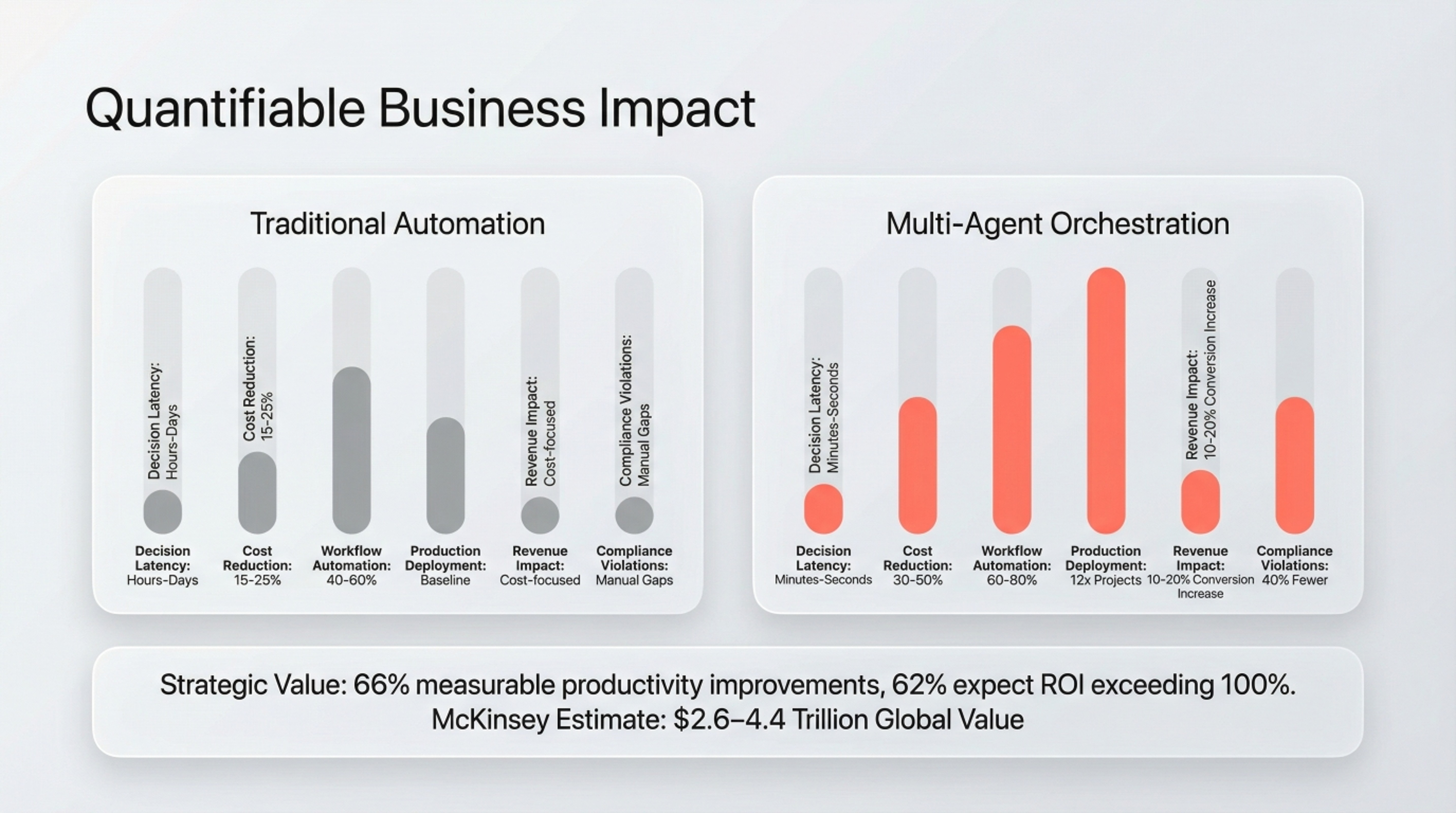

Why This Architecture Actually Works

The shift isn’t theoretical—it’s measurable.

📊 Industry Signal (2025)

- Organizations using multi-agent workflows on Databricks grew 327% in just four months (June–October 2025)
- 78% of enterprises now use two or more LLM families
- Smaller models → routine, low-risk tasks
- Frontier models → high-stakes reasoning

This confirms what we see in practice:

Model orchestration beats model size.

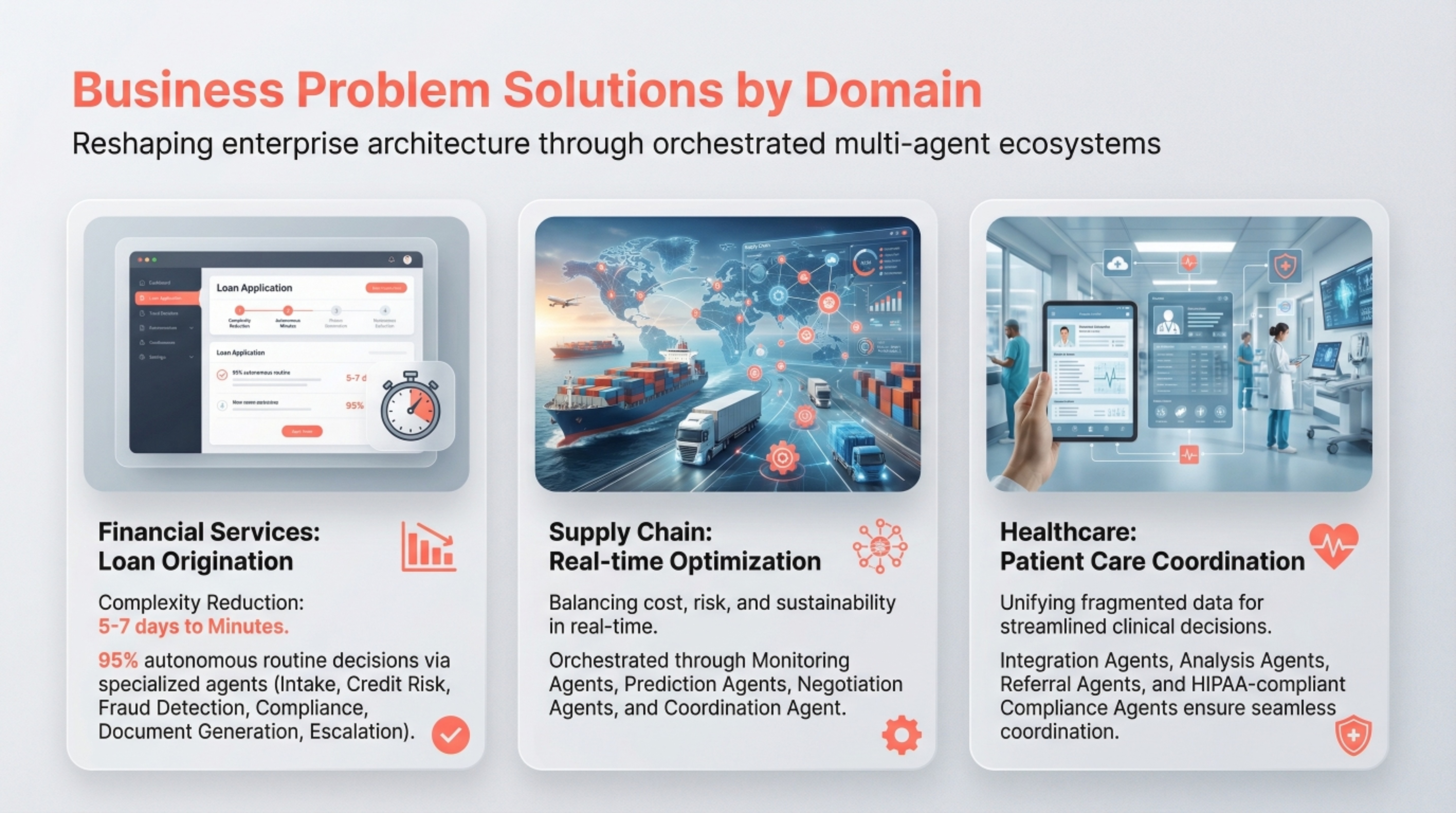

How Versalence AI Implements This in Practice

We don’t “add agents for complexity.”

We design outcome-driven agent ecosystems.

1️⃣ Outcome-First Architecture

We start with business outcomes:

- Reduce inbound workload by 60%
- Cut turnaround time (TAT) from days to minutes
- Enforce compliance without manual review

Agents are mapped only where they add leverage.

2️⃣ Model Routing, Not Model Worship

We deliberately mix:

- Lightweight models for classification & routing
- Advanced models for planning & reasoning

This reduces:

- Cost
- Latency
- Risk
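Routing can start as plain rules before any learned classifier is involved. This Python sketch is a minimal rule-based router; the model identifiers, task types and risk flags are all hypothetical placeholders, and in practice a lightweight model would likely assign the task type and flags that these rules consume.

```python
# Hypothetical model tiers; substitute the identifiers your platform exposes.
LIGHTWEIGHT_MODEL = "small-model"
FRONTIER_MODEL = "frontier-model"

# Task types considered routine, and flags that mark a request as high-stakes.
ROUTINE_TASKS = {"classify", "route", "extract_fields", "summarize"}
HIGH_STAKES_FLAGS = {"financial_decision", "regulated_data", "customer_commitment"}


def choose_model(task_type: str, flags: frozenset[str] = frozenset()) -> str:
    """Pick the cheapest model tier that is appropriate for the task."""
    if flags & HIGH_STAKES_FLAGS:
        return FRONTIER_MODEL  # any high-stakes signal overrides the task type
    if task_type in ROUTINE_TASKS:
        return LIGHTWEIGHT_MODEL
    return FRONTIER_MODEL  # unknown or reasoning-heavy work defaults to the stronger tier


print(choose_model("classify"))
print(choose_model("classify", frozenset({"regulated_data"})))
print(choose_model("plan"))
```

Defaulting unknown work to the stronger tier trades some cost for safety; a team that prioritises cost could invert that default, at the price of more risk on unfamiliar requests.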

3️⃣ Built-In Governance (Not Retrofits)

Every Versalence deployment includes:

- Validation checkpoints
- Compliance gates
- Full traceability across agent decisions

No black boxes. No “AI said so.”
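Traceability across agent decisions can start with something as small as a decorator that records every agent step. The Python sketch below is a minimal version under stated assumptions: it keeps traces in an in-memory list and uses hypothetical `plan` and `check` steps, whereas a real deployment would ship these records to a durable log or tracing backend. It also addresses the "failure has no owner" problem: the failing step is recorded by agent name.

```python
import functools
import time
import uuid
from typing import Any, Callable

TRACE: list[dict[str, Any]] = []  # in-memory stand-in for a trace store


def traced(agent_name: str) -> Callable:
    """Record inputs, outputs, status and duration of every call to an agent step."""
    def decorator(fn: Callable) -> Callable:
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            entry: dict[str, Any] = {
                "span_id": uuid.uuid4().hex,
                "agent": agent_name,
                "inputs": repr((args, kwargs)),
            }
            start = time.perf_counter()
            try:
                result = fn(*args, **kwargs)
                entry.update(status="ok", output=repr(result))
                return result
            except Exception as exc:
                entry.update(status="error", error=repr(exc))
                raise  # the failure still propagates, but now it has an owner
            finally:
                entry["duration_ms"] = round((time.perf_counter() - start) * 1000, 3)
                TRACE.append(entry)
        return wrapper
    return decorator


@traced("planner")
def plan(request: str) -> list[str]:
    return ["classify", "retrieve_policy", "decide"]


@traced("validator")
def check(draft: str) -> bool:
    raise ValueError("unsupported claim in draft")


plan("refund for order 1001")
try:
    check("A 15% restocking fee applies.")
except ValueError:
    pass

for entry in TRACE:
    print(entry["agent"], entry["status"])
```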

4️⃣ vCX as the Control Plane

Our vCX Social CRM acts as the:

- Conversation memory layer
- Agent orchestration hub
- Human-in-the-loop escalation point

This ensures AI is embedded in existing operations rather than running alongside them.
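vCX's internals are not described here, so the following is only a generic Python sketch of two of the roles listed above: bounded conversation memory and a human-in-the-loop escalation rule. It does not reflect the vCX API; the `Conversation` class, the `route_reply` function and the 0.75 confidence threshold are hypothetical.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Conversation:
    """Bounded memory: only the most recent `max_turns` turns are retained."""
    conversation_id: str
    max_turns: int = 20
    turns: deque = field(default_factory=deque, init=False)

    def __post_init__(self) -> None:
        self.turns = deque(maxlen=self.max_turns)

    def add(self, role: str, text: str) -> None:
        self.turns.append((role, text))


def route_reply(
    conv: Conversation,
    draft: str,
    validation_passed: bool,
    confidence: float,
    threshold: float = 0.75,
) -> tuple[str, list[str]]:
    """Send the AI draft, or hand the conversation to a human with the reasons why."""
    reasons: list[str] = []
    if not validation_passed:
        reasons.append("validation failed")
    if confidence < threshold:
        reasons.append(f"confidence {confidence:.2f} below {threshold:.2f}")
    if reasons:
        conv.add("system", "escalated to human: " + "; ".join(reasons))
        return "human", reasons
    conv.add("assistant", draft)
    return "ai", []


conv = Conversation("conv-001", max_turns=3)
conv.add("customer", "Where is my refund?")
print(route_reply(conv, "Your refund was issued yesterday.", validation_passed=True, confidence=0.92))
print(route_reply(conv, "You are owed a 20% bonus.", validation_passed=False, confidence=0.40))
print(list(conv.turns))
```

Recording the escalation reason in the conversation itself means the human who picks it up sees why the AI stepped back, not just that it did.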

From Assistants to Ecosystems

The future of enterprise AI is not:

- A smarter chatbot
- A larger context window
- A single “super model”

It is orchestrated intelligence:

- Modular
- Governed
- Auditable
- Scalable

At Versalence AI, we design AI systems the way enterprises actually run:

As coordinated teams—not lone geniuses.